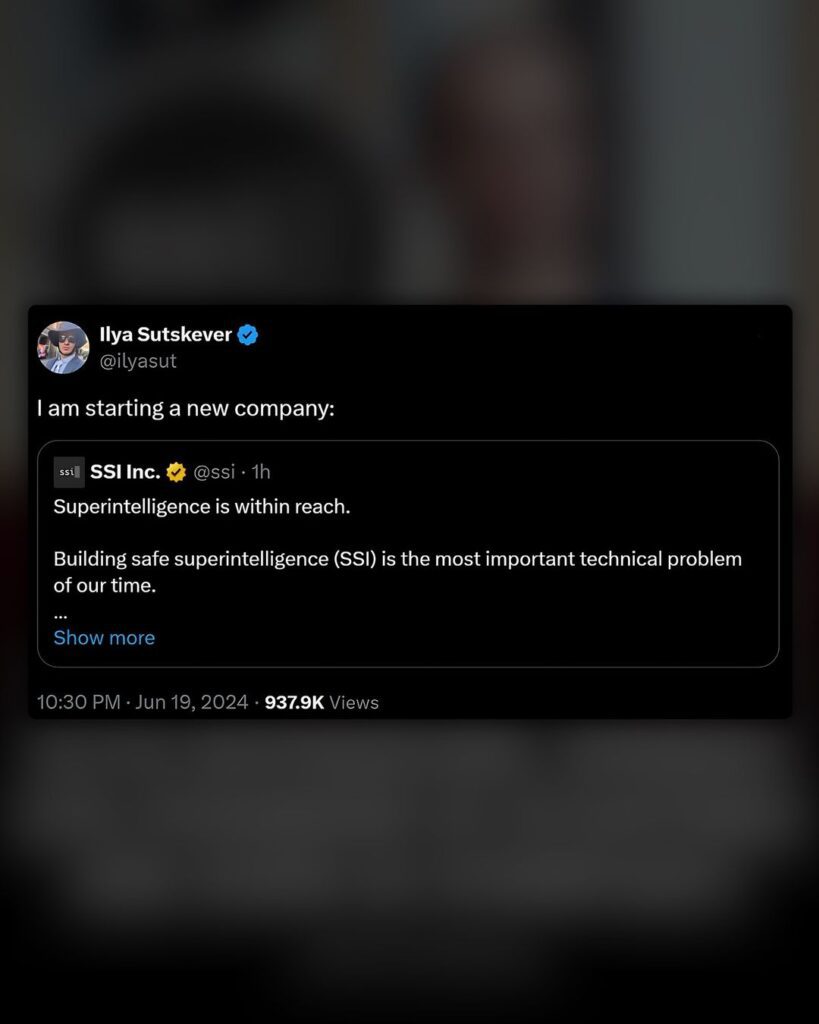

Ilya Sutskever, co-founder and former chief scientist of OpenAI, has launched a new AI company, Safe Superintelligence, which aims to build superintelligent machines safely. The startup, co-founded with former Apple AI expert Daniel Gross and ex-OpenAI colleague Daniel Levy, will focus exclusively on developing superintelligence and will not release other products, according to spokeswoman Lulu Cheng Meservey.

Sutskever, who has expressed regret over his role in the ouster of OpenAI CEO Sam Altman last November, left OpenAI last month. The new venture has not disclosed its funding sources or how much it has raised.

OpenAI’s release of ChatGPT in 2022 brought generative AI into the mainstream and influenced sectors across the tech industry. Internal conflicts later led to Altman’s brief removal, which was reversed after staff protests. Sutskever’s concerns about AI’s potential dangers had also prompted the formation of OpenAI’s Superalignment team, which was charged with preventing AI harm.

Sutskever’s new venture, launched after his departure from OpenAI, reflects his continued commitment to safe AI development. Meanwhile, Jan Leike, another key figure from the Superalignment team, has joined competitor Anthropic.