Nvidia delivered one of the strongest quarters in corporate history, yet the stock was thrown into a fresh storm of AI bubble fears, violent price swings and macro worries.

Below is a full breakdown of what actually happened in the numbers, what Jensen Huang is telling investors about the future of AI, and why Wall Street is both impressed and nervous at the same time.

1. A quarter that crushed expectations

Fiscal Q3 2026 was another record for Nvidia (NVDA). The company did not just beat; it cleared the bar with room to spare.

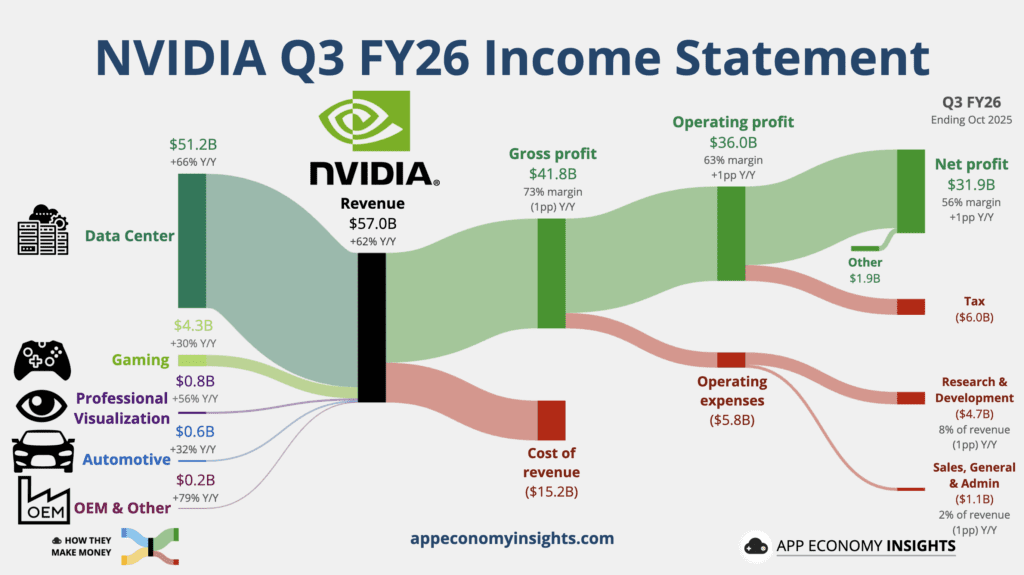

- Revenue: 57.01 billion dollars, ahead of estimates of around 55 billion dollars, up about 62 percent year over year and more than 20 percent quarter over quarter.

- Adjusted EPS: 1.30 dollars, slightly ahead of expectations, up about 60 percent versus last year and more than 20 percent versus last quarter.

- GAAP net income: 31.91 billion dollars, up about 65 percent from the prior year.

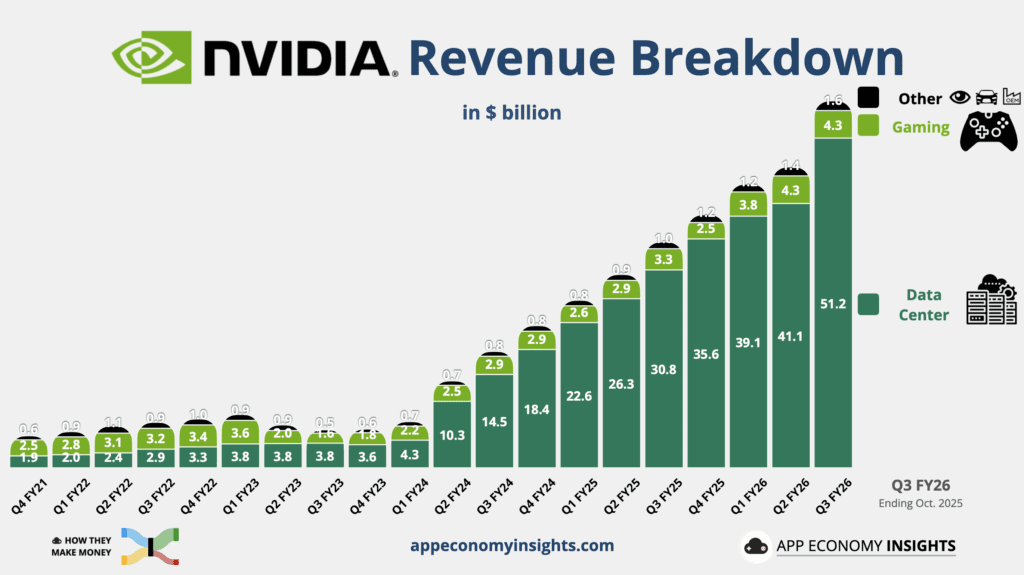

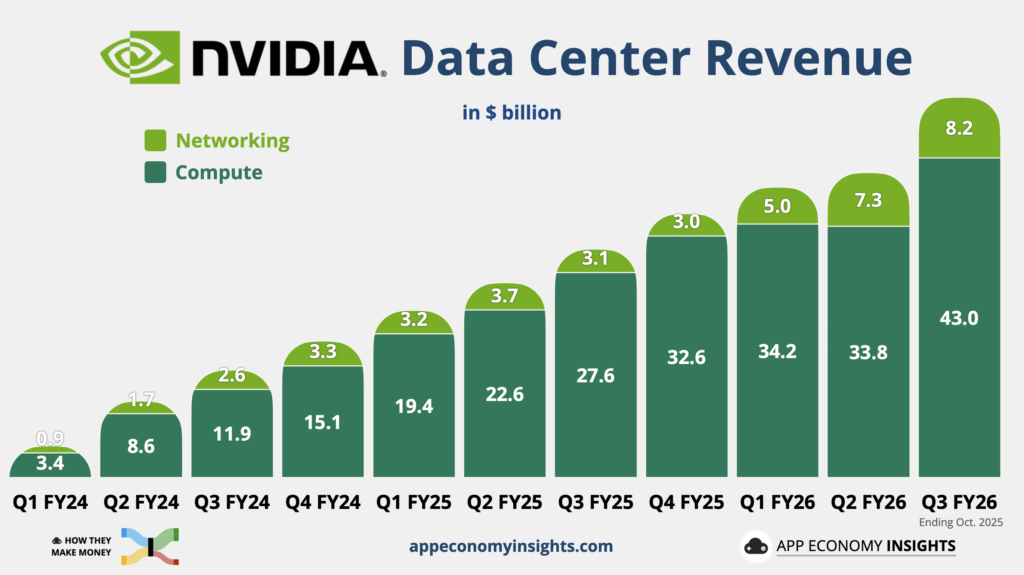

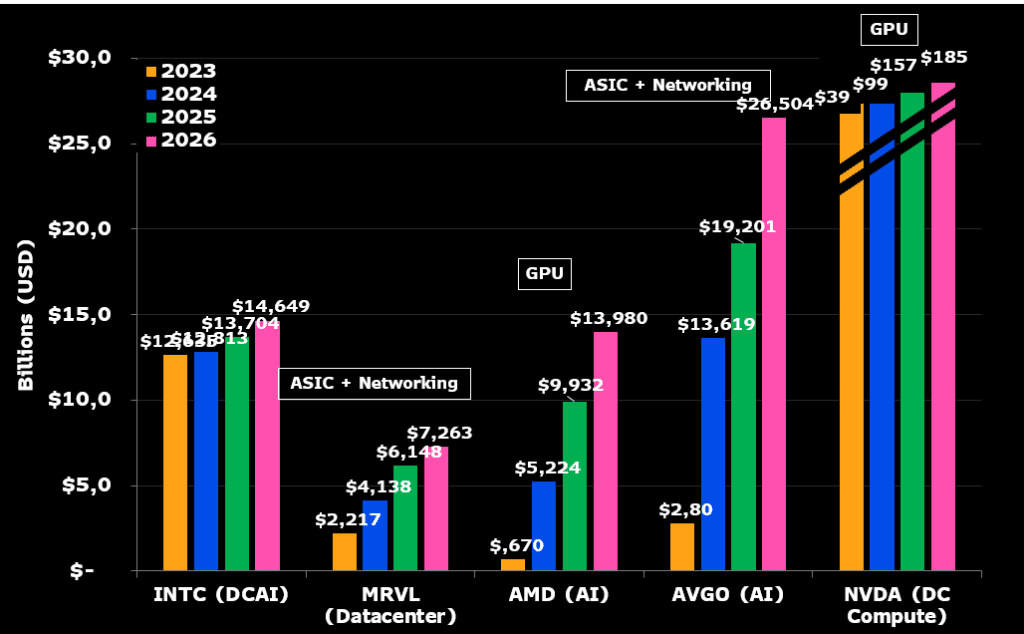

The data center segment was the engine behind everything:

- Data center revenue: 51.2 billion dollars, up roughly two thirds from a year ago and about one quarter from Q2, beating every major Wall Street forecast.

- Around 43 billion dollars came from compute, mainly GPUs.

- About 8.2 billion dollars came from high-speed networking gear that lets those GPUs act as one giant computer.

- Management said demand for its current-generation Blackwell and GB300 chips is “off the charts” and that cloud GPUs are effectively sold out.

- The best-selling product family is already Blackwell Ultra, the second generation of Blackwell.
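As a quick back-of-envelope check on the figures above, the short Python sketch below computes the data center segment's share of total revenue and the networking share of that segment. It uses only the rounded numbers quoted in this article (the 43 billion dollar compute figure is itself approximate), so treat the outputs as rough.

```python
# Q3 fiscal 2026 figures as quoted in this article, in billions of US dollars.
total_revenue = 57.01
data_center = 51.2
compute = 43.0      # "around 43 billion", approximate
networking = 8.2

def share(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole

dc_share = share(data_center, total_revenue)
compute_plus_networking = compute + networking
networking_share_of_dc = share(networking, data_center)

print(f"Data center share of revenue: {dc_share:.1f}%")
print(f"Compute + networking: {compute_plus_networking:.1f}B vs reported {data_center}B")
print(f"Networking share of data center: {networking_share_of_dc:.1f}%")
```

On these inputs, data center works out to roughly 90 percent of total revenue, and networking to about 16 percent of the segment.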

Other segments were smaller but still solid:

- Gaming: 4.3 billion dollars, about 30 percent higher than a year ago, essentially flat versus last quarter and a touch below consensus.

- Professional visualization: 760 million dollars, up more than 50 percent year over year and about one quarter sequentially, ahead of expectations.

- Automotive and robotics: 592 million dollars, up roughly one third year over year and slightly higher than Q2.

Margins stayed exceptional. Adjusted gross margin was around 74 percent, with adjusted operating income close to 38 billion dollars and adjusted net income almost 32 billion dollars. Free cash flow reached about 22 billion dollars, up more than 30 percent from a year ago.

For the current quarter, Nvidia guided to about 65 billion dollars of revenue, plus or minus 2 percent, clearly higher than the roughly 62 billion dollars analysts were expecting. Non-GAAP gross margin is guided to edge higher again, to about 75 percent.
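To put the guide in perspective, here is a minimal sketch that turns the 65 billion dollar midpoint and its plus or minus 2 percent band into a dollar range, then compares it with the roughly 62 billion dollar consensus and with Q3 revenue of 57.01 billion dollars. The consensus value is the article's approximate figure, not a precise estimate.

```python
# Q4 fiscal 2026 guidance as described above, in billions of US dollars.
guide_midpoint = 65.0
guide_band = 0.02      # "plus or minus 2 percent"
consensus = 62.0       # "roughly 62 billion", approximate
q3_revenue = 57.01

low = guide_midpoint * (1 - guide_band)
high = guide_midpoint * (1 + guide_band)

# Percentage gaps relative to the consensus figure and to the quarter just reported.
beat_vs_consensus = 100 * (guide_midpoint / consensus - 1)
sequential_growth = 100 * (guide_midpoint / q3_revenue - 1)

print(f"Guided range: {low:.1f}B to {high:.1f}B")
print(f"Midpoint vs consensus: +{beat_vs_consensus:.1f}%")
print(f"Implied growth vs Q3: +{sequential_growth:.1f}%")
```

Even the low end of the range, about 63.7 billion dollars, sits above the consensus figure, and the midpoint implies roughly 14 percent sequential growth.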

In simple language, the core business is still exploding.

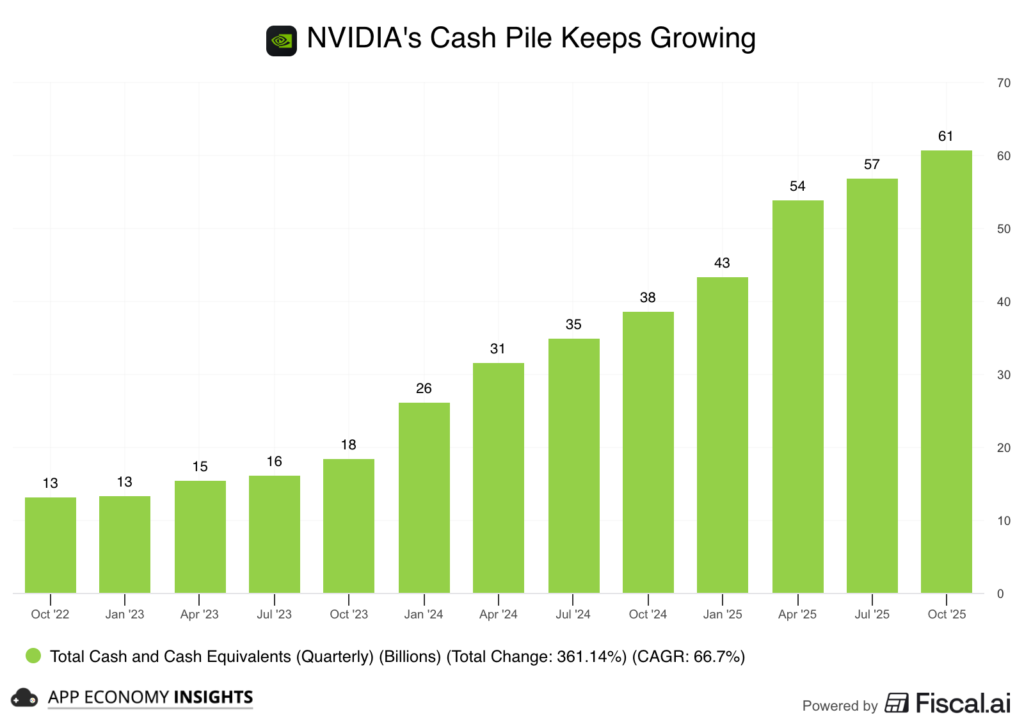

2. Balance sheet, cash returns and a giant inventory build

The cash machine behind those earnings is getting bigger and more aggressive.

- Cash, cash equivalents and marketable securities rose to 60.6 billion dollars, up from 38.5 billion dollars a year earlier.

- Operating cash flow was 23.8 billion dollars in the quarter, compared with 17.6 billion dollars a year ago and 15.4 billion dollars in the prior quarter.

At the same time, Nvidia is clearly building ahead for the next wave of AI demand:

- Inventory climbed to 19.8 billion dollars, up from 15.0 billion dollars just one quarter earlier.

- Total supply-related commitments reached 50.3 billion dollars. The finance team explained that these commitments secure long-lead-time components, meet demand for Blackwell and support future architecture ramps.

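- Multi-year cloud service agreements, Nvidia’s own commitments to buy capacity from cloud providers largely to support its research and DGX Cloud offerings, rose to 26.0 billion dollars from 12.6 billion dollars in Q2, showing how much compute the company is locking in years in advance.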
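These moves are easier to compare as growth rates. A small sketch, using only the rounded figures quoted above (in billions of dollars):

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return 100 * (new - old) / old

# (label, earlier value, later value), in billions of US dollars, as quoted above.
moves = [
    ("Inventory, Q2 to Q3", 15.0, 19.8),
    ("Multi-year cloud service agreements, Q2 to Q3", 12.6, 26.0),
    ("Cash and marketable securities, year over year", 38.5, 60.6),
]

growth = {label: pct_change(old, new) for label, old, new in moves}

for label, pct in growth.items():
    print(f"{label}: {pct:+.1f}%")
```

Inventory rose about 32 percent in a single quarter, the cloud service commitments more than doubled (about 106 percent), and the cash pile grew about 57 percent over the year.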

Shareholders are being paid very well for all of this:

- Nvidia has already returned 37 billion dollars in the first nine months of fiscal 2026 through buybacks and dividends.

- There is still 62.2 billion dollars left under the current repurchase authorization.

- The quarterly dividend remains symbolic at one cent per share, which makes clear that management prefers buybacks.

In Q3 alone, the company spent 12.5 billion dollars on share repurchases and about 243 million dollars on dividends.
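The Q3 capital return figures also allow a rough consistency check. A minimal sketch, assuming the 243 million dollars corresponds to exactly one quarterly dividend of one cent per share (both inputs are rounded, so the implied share count is only approximate):

```python
# Q3 fiscal 2026 capital returns as quoted above, in US dollars.
buybacks_q3 = 12.5e9
dividends_q3 = 243e6
dividend_per_share = 0.01  # one cent per share per quarter

total_returned_q3 = buybacks_q3 + dividends_q3
buyback_pct = 100 * buybacks_q3 / total_returned_q3

# Assumption: the dividend total covers exactly one quarterly payment.
implied_shares = dividends_q3 / dividend_per_share

print(f"Total returned in Q3: ${total_returned_q3 / 1e9:.2f}B")
print(f"Buybacks as share of Q3 returns: {buyback_pct:.1f}%")
print(f"Implied shares outstanding: {implied_shares / 1e9:.1f}B")
```

Buybacks account for about 98 percent of the cash returned in the quarter, which is the arithmetic behind calling the dividend symbolic.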

3. Blackwell, performance per watt and the power problem

The star of the show is still Nvidia’s data center platform, especially the Blackwell family of GPUs and the full stack around them.

On the earnings call and in later comments, Jensen Huang kept returning to one phrase: performance per watt. Data centers face hard limits on how much power they can draw. Huang’s message was direct:

You still only have one gigawatt of power, so performance per watt translates directly to your revenues.

According to Nvidia:

- Blackwell-based systems running mixture-of-experts reasoning models can deliver around ten times higher performance per watt and roughly ten times lower cost per token than the previous H200 generation in some benchmarks.

- Internal tests on models such as DeepSeek-R1, running over NVLink, show Blackwell as a huge generational leap for inference as well as training.
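Huang’s claim that performance per watt translates directly into revenue follows from a simple identity: with the power budget fixed, tokens served per second equal power multiplied by tokens per joule, and revenue equals tokens multiplied by price. The sketch below illustrates that identity. All efficiency and price values are hypothetical placeholders, not Nvidia figures, and the model ignores cooling overhead, utilization and price changes; only the ratio between the two results is meaningful.

```python
from dataclasses import dataclass

SECONDS_PER_YEAR = 365 * 24 * 3600

@dataclass
class Architecture:
    name: str
    tokens_per_joule: float  # hypothetical efficiency; equals tokens per second per watt

def annual_revenue(arch: Architecture, power_watts: float, usd_per_million_tokens: float) -> float:
    """Yearly revenue if the whole power budget runs one architecture at full load.

    tokens per second = power (W) * tokens per joule; revenue = tokens * price.
    """
    tokens_per_year = power_watts * arch.tokens_per_joule * SECONDS_PER_YEAR
    return tokens_per_year / 1e6 * usd_per_million_tokens

ONE_GIGAWATT = 1e9  # the fixed power budget from Huang's example, in watts
PRICE = 1.0         # hypothetical price in USD per million tokens

older = Architecture("previous generation (hypothetical)", tokens_per_joule=1.0)
newer = Architecture("10x performance per watt (hypothetical)", tokens_per_joule=10.0)

for arch in (older, newer):
    revenue = annual_revenue(arch, ONE_GIGAWATT, PRICE)
    print(f"{arch.name}: ${revenue / 1e9:,.1f}B per year")
```

Holding power and price constant, a tenfold efficiency gain shows up one for one as a tenfold revenue gain from the same gigawatt, which is the economic core of Nvidia’s pitch.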

This is also how Nvidia responds to critics such as Michael Burry. They point out that older A100 GPUs use two to three times more power per unit of compute than newer chips, and they question whether those chips still create real economic value, especially when Nvidia itself says Blackwell is far more energy efficient.

Huang effectively answered that challenge with a single line from the transcript:

The A100 GPUs we shipped six years ago are still running at full utilization today, now powered by a much stronger software stack.

In other words, Nvidia argues that the combination of CUDA software, networking and system design keeps extending the economic life of those older chips, even as new generations roll in.

There is also the question of constraints. When an analyst asked whether power, financing, memory or foundry capacity could limit Nvidia’s growth, Huang’s answer was that all of them are constraints, and none of them are fatal.

He stressed that:

- Nvidia has more than three decades of relationships across the supply chain.

- The company now has partners for land, power, data center shells and financing.

- Careful planning both up and down the supply chain is key so that every unit of energy produces as much revenue as possible.

- Because Nvidia designs the full stack, from chips to software, each generation is meant to deliver better performance per watt and higher value per dollar for customers, not just more raw speed.

Key quotes from the earnings call

CFO Colette Kress:

On demand and long-term visibility:

“We currently have visibility to $500 billion in Blackwell and Rubin revenue from the start of this year through the end of calendar year 2026. […] We believe NVIDIA will be the superior choice for the $3 trillion-$4 trillion in annual AI infrastructure build we estimate by the end of the decade. Demand for AI infrastructure continues to exceed our expectations.”

This anchors the forward growth outlook and places the $500 billion Blackwell and Rubin pipeline inside a multi-trillion-dollar, decade-long build-out.

CEO Jensen Huang:

On power limits and performance per watt:

“In the end, you still only have one gigawatt of power, one gigawatt data centers, one gigawatt of power. Therefore, performance per watt, the efficiency of your architecture, is incredibly important. […] Your performance per watt translates directly to your revenues, which is the reason why choosing the right architecture matters so much now.”

The takeaway: power is the binding constraint, and performance per watt is the real economic battleground, one that could favor NVIDIA over the long haul.

On the ecosystem and running every model:

“NVIDIA’s architecture, NVIDIA’s platform is the singular platform in the world that runs every AI model.[…] We run OpenAI. We run Anthropic. We run xAI […] We run Gemini […] We run science models, biology models, DNA models, gene models, chemical models […] AI is impacting every single industry.”

Huang’s ecosystem argument is simple: one architecture, every major model, across consumer apps, enterprises, and science. It reinforces CUDA as the default AI operating layer.

4. Three platform shifts and the AI bubble debate

A huge piece of this quarter was Huang trying to reframe the entire AI bubble conversation.

In both the call and a separate interview, he pushed back on the idea that this is just speculative froth. He said the world is going through three simultaneous platform shifts:

- From CPU-based general-purpose computing to GPU-accelerated computing. A gigantic base of traditional software for data processing and scientific simulation is moving to CUDA GPUs as Moore’s Law slows. That spending already runs into hundreds of billions of dollars each year and used to live mainly on CPUs.

- From classical machine learning to generative AI inside existing applications. Huang pointed to Meta as a live example. Its ad ranking and recommendation systems, trained on large GPU clusters, helped drive more than a five percent increase in ad conversions on Instagram and around a three percent improvement in the Facebook feed. For hyperscalers, those small percentages translate into very real revenue, not just marketing slogans.

- From today’s models to agentic and physical AI. This includes reasoning systems and real-world tools: coding assistants such as Cursor and Claude Code, radiology tools such as iDoc, legal assistants such as Harvey, self-driving platforms such as Tesla FSD and Waymo, and a growing wave of industrial and service robots.

His message to investors was simple. The AI buildout is not one narrow craze. It is three overlapping structural shifts that will require huge infrastructure for many years. Nvidia’s pitch is that one unified architecture, spanning cloud, enterprise and robotics, allows it to serve all three waves.

On the call, he opened by acknowledging that there has been a lot of talk about an AI bubble, then added that from Nvidia’s vantage point, the company sees something very different.

5. Inside the company: “the market did not appreciate it”

The public call was only part of the story. In a leaked all-hands meeting on Thursday, Huang spoke even more bluntly to employees.

He told staff that “the market did not appreciate” what he called an incredible quarter and said Nvidia was in a kind of no win situation in the current debate.

- If Nvidia had delivered a weak quarter, critics would shout that this proves an AI bubble.

- If Nvidia delivered a strong quarter, the same people would say the company is fueling that bubble.

Huang joked about the memes showing Nvidia “holding the planet together” and added that this was “not untrue.” He also reminded employees that the company had briefly been worth about five trillion dollars, noting that “nobody in history has ever lost five hundred billion in a few weeks,” a loss only an extraordinarily valuable company could suffer.

The internal message was clear: keep building and let the stock market worry about its daily mood swings.

6. Customers, model builders and early proof of value

One of Nvidia’s strongest arguments against the bubble narrative is what its customers are actually doing. Management spent a lot of time highlighting real-world outcomes. The past quarter was a blur of mega-partnerships, each one expanding NVIDIA’s reach deeper into the AI stack.

On the model builder and strategic partner side:

- OpenAI is working with NVIDIA to build and deploy at least 10 GW of AI data centers. NVIDIA plans to take an equity stake and invest up to $100 billion over time as part of the multi-year buildout.

- Anthropic signed a deep platform deal to run up to 1 gigawatt of Grace Blackwell and Rubin systems, alongside a planned $10 billion investment from NVIDIA. Anthropic will purchase $30 billion of compute from Azure and collaborate with NVIDIA on model training and hardware optimization, turning a prior non-customer into a full-stack NVIDIA partner.

- xAI: Building gigawatt-scale Colossus 2 AI factories anchored on Blackwell, and partnering with HUMAIN on a 500 MW flagship data center in Saudi Arabia. Separately, AWS and HUMAIN plan to deploy up to 150,000 NVIDIA accelerators.

- Saudi Arabia (KSA): Framework agreement for roughly 400,000 to 600,000 GPUs over three years.

- Palantir: Bringing CUDA-X into Ontology, with customers like Lowe’s already using it for supply chain and analytics workflows.

- Fujitsu, Intel, and Arm: Announced NVLink integrations that wire their CPU roadmaps directly into NVIDIA’s ecosystem.

On the enterprise and industry side:

- RBC is using agentic AI to cut the time needed to produce analyst reports from hours to minutes.

- Unilever has doubled content creation speed while cutting costs in that area by about half.

- Salesforce engineers using tools like Cursor are seeing at least thirty percent productivity gains.

Nvidia also stressed that physical AI is already a multibillion-dollar business. Companies such as Belden, Caterpillar, Foxconn, Lucid, Toyota, TSMC, Wistron, Agility Robotics, Amazon Robotics, Figure and Skild AI are building robots and automated systems on Nvidia platforms. Huang described this as a multitrillion-dollar opportunity over time.

For Nvidia, these examples are proof that AI is not just a line item in hyperscaler capital expenditure budgets. It is starting to show up in productivity, cost savings and entirely new products.

7. China: forecast set to zero, optional upside if rules change

One of the more striking details this quarter was how little Nvidia currently relies on China.

Over the past year the company fought to obtain licenses for its H20 chip, a slowed-down version of older technology that complies with United States export rules. At one point some analysts thought China could be worth fifty billion dollars a year to Nvidia.

The company eventually secured licenses after Huang personally met President Donald Trump and agreed to a structure in which the United States government would take fifteen percent of China-related sales.

In practice, the result so far is tiny. Colette Kress said:

- H20 revenue in the quarter was about fifty million dollars, which she described as insignificant.

- Large purchase orders never materialized, partly due to geopolitical uncertainty and partly because local competitors have been gaining ground.

Because of this, Nvidia now assumes zero contribution from China data center sales in its forecast. Huang said he would love to re-engage with China if the rules change and that it would be fantastic to participate in that market again, but investors are being told not to count on that outcome.

That makes the current growth even more striking. These record numbers are being delivered without meaningful help from what used to be one of Nvidia’s biggest markets.

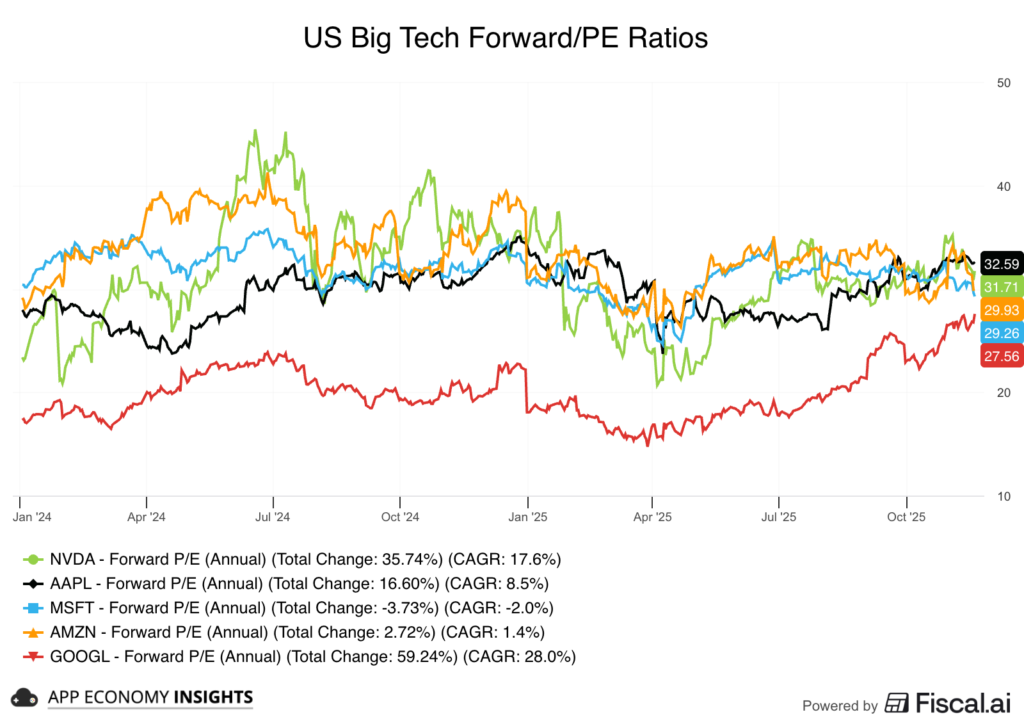

8. Wall Street reaction: still “THE AI company”

Despite the stock’s choppy trading, professional analysts came away even more bullish.

- Truist described Nvidia as “THE AI company” and said there is still significant growth and stock upside ahead. They raised their calendar 2026 EPS estimate from 6.50 dollars to 7.30 dollars and lifted their price target from 228 dollars to 255 dollars, using a multiple of around thirty five times earnings.

- Dan Ives at Wedbush called it a “drop the mic quarter” and said this was the earnings call that tech bulls needed to hear.

- UBS argued that the AI infrastructure tide is rising so quickly that almost all boats will lift, and that Nvidia is actually tightening its grip as the central enabler of AI across text, video and other modalities.
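Truist’s numbers can be reproduced with the standard earnings-multiple approach: price target equals the EPS estimate times the chosen price-to-earnings multiple. A minimal sketch, assuming a flat 35x multiple (the article says “around” 35x, and we do not know exactly how Truist set its earlier target):

```python
def price_target(eps_estimate: float, pe_multiple: float) -> float:
    """Earnings-multiple valuation: target price = EPS estimate x P/E multiple."""
    return eps_estimate * pe_multiple

MULTIPLE = 35.0  # assumption: a flat 35x, per "around thirty five times earnings"

for label, eps in (("old 2026 EPS estimate", 6.50), ("new 2026 EPS estimate", 7.30)):
    print(f"{label}: {eps:.2f} x {MULTIPLE:.0f} = ${price_target(eps, MULTIPLE):.2f}")
```

At 35x, the new 7.30 dollar estimate gives about 255.5 dollars, in line with the 255 dollar target, and the old 6.50 dollar estimate gives 227.5 dollars, close to the previous 228 dollar target. Under this assumption, nearly all of the target increase comes from higher earnings estimates rather than a richer multiple.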

Across the Street, price targets moved sharply higher. Recent revisions include:

- Evercore ISI now at 352 dollars, up from 261 dollars, the highest widely quoted target.

- Melius, KGI, Baird, Barclays, Bernstein, Citi, BNP, JPMorgan, Stifel, KeyBanc, Wolfe, Jefferies, Goldman, Rosenblatt, Mizuho, William Blair, CITIC, Deutsche Bank and Morningstar all raised their targets, many into the 240 to 275 dollar range.

The overall picture:

- The median target has shifted from about 230 dollars to roughly 250 dollars.

- Even the lowest major target has climbed from around 180 dollars to about 230 dollars.

In short, Wall Street research generally sees Nvidia as the clear winner in AI and expects earnings to keep growing very rapidly.

At the same time, even supportive firms such as Bank of America and Saxo Bank highlight important risks, including customer concentration, heavy use of debt-financed capital expenditure by model builders, and the physical constraints around power and data center capacity.

9. Why the stock still sold off and what the bears are worried about

If the numbers were so strong, why did NVDA soar then slump, dragging the entire AI basket with it and helping to trigger one of the wildest two-day swings in the S&P 500 this year?

A few themes keep coming up in more cautious research and opinion pieces.

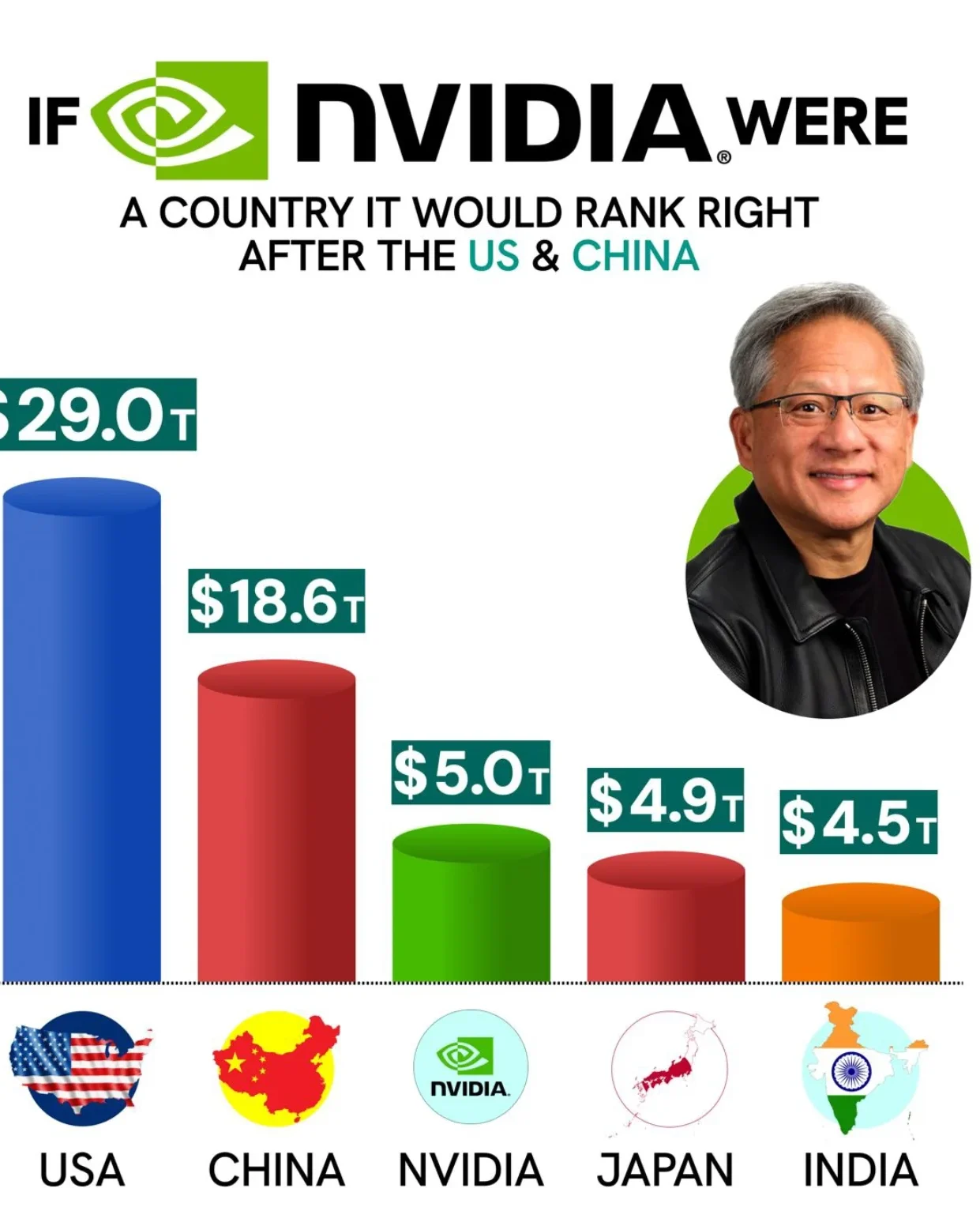

Valuation and crowded positioning

Nvidia’s market value is around 4.5 trillion dollars, and the stock had become the ultimate AI momentum trade. Many hedge funds and retail traders were heavily overweight. When macro worries flared and sentiment gauges such as the Fear and Greed Index slid back into extreme fear, even a near-perfect quarter struggled to push the stock higher.

Customer concentration and debt-financed capex

A large part of Nvidia’s demand comes from a small group of hyperscalers and model builders that are committing huge sums to data centers, often with rising leverage. Bank of America and others note that more AI infrastructure is being financed with debt. Critics worry that if revenue growth slows, some of those customers may have trouble justifying their spending plans.

Physical and political constraints

Data centers require land, power, cooling and local approvals. Several commentators point out that power shortages, grid bottlenecks and community resistance could eventually slow AI buildouts. That creates a new category of risk that is partly economic and partly political.

Skepticism about real-world value

Investors such as Michael Burry argue that high utilization of older chips does not automatically mean high profitability, especially once energy costs and depreciation are taken into account. In this view, some AI projects could turn out to be expensive science experiments if monetization lags too far behind spending.

These worries were visible in the trading after the report. Nvidia’s stock initially jumped in extended hours, then reversed sharply, finishing the next main session down around 3 percent. The selloff spilled into the wider AI complex:

- Semiconductor indices fell as stocks like Advanced Micro Devices dropped sharply.

- Pure play AI software firms such as C3.ai suffered even steeper declines and are now down more than 25 percent over the past month.

- Fund managers rotated money into defensive sectors such as healthcare, which has been one of the best performers this month, while technology has been the weakest group in the S&P 500.

Fortune and other outlets captured the mood with a simple idea: Nvidia was being punished in the market precisely because its report was so strong, reigniting anxiety that the AI boom is too good to last.

10. What this quarter really tells us about the AI cycle

Putting everything together, a few clear conclusions stand out.

AI infrastructure demand is still accelerating.

Data center revenue growth above 60 percent, a Q4 guide that implies another step up and visibility into about 500 billion dollars of Blackwell and Rubin revenue across calendar 2025 and 2026 all point to a multi-year buildout that is far from finished. Management says that outlook is still on track and that recent deals with partners like Anthropic and Saudi Arabia are not yet fully reflected, so the number is likely to increase.

Nvidia is deepening its moat rather than just riding a bubble.

The CUDA software ecosystem, the long usable life of GPUs such as A100, aggressive investment in networking and a unified architecture that spans cloud, enterprise and robotics all reinforce Nvidia’s central role in AI. Customer case studies and model builder economics show that at least some of this spending is already translating into productivity gains and new revenue streams.

The main risks sit around Nvidia, not inside it.

The biggest uncertainties involve power, regulation, financing and the ability of Nvidia’s customers to generate enough profit to justify their massive capital expenditure plans. Those are real issues, yet so far they have not slowed orders, which is why Nvidia continues to build inventory and sign multi-year commitments at record levels.

Markets are trading on emotion as much as on math.

This was the “drop the mic” quarter that many tech bulls were waiting for. Analysts raised their targets and reiterated buy ratings. At the same time, the stock became the focal point of one of the sharpest sentiment swings of the year, with the same numbers used to argue both that AI is the future and that AI is a bubble.

That tension is exactly what Huang was hinting at when he joked that memes about Nvidia holding the world together contain a bit of truth.

For now, Nvidia’s latest quarter confirms that the AI revolution is still very real, even if the path of the stock is anything but smooth. The company is investing and planning as if AI spending will keep compounding. Investors, meanwhile, remain torn between the fear of missing the next great technology wave and the fear of being left holding the bag.

Note: Our call on Nvidia Q3 FY2026 earnings was roughly 90 percent accurate, with revenue, EPS, margins and data center growth all landing only a few percent above our original forecasts (see Nvidia Q3 FY2026 Earnings Preview and Prediction: What to expect?).

Disclosure: This article does not represent investment advice. The content and materials featured on this page are for educational purposes only.